IBM's stealth XIV announcement

From Wikibon

Storage Peer Incite: Notes from Wikibon’s August 26, 2008 Research Meeting

Moderator: David Vellante & Analyst: Josh Krischer

Earlier this month IBM announced XIV version 2. Or did it? Certainly it was a strange, incomplete announcement that raised at least as many questions as it answered. Certainly XIV, which has continued to operate as an independent small storage vendor since IBM purchased it late in 2007, has introduced its next generation product. But before IBM can add XIV as an offering, it needs to train its sales and support staff, organize manufacturing and distribution, and define where XIV fits in IBM's overall stable of storage products, among other things. None of that was present in the August announcement. In fact, at least one prominent Wikibon community member believes that the announcement was a mistake. IBM usually does not do major product announcements in the summer, and someone may have slipped up and released the announcement ahead of schedule. Another suggested that IBM may be making a series of pre-announcements this summer, leading to a major announcement of several new product versions in September.

Whatever this announcement portends, there is no doubt that complete details were sent out, at least to IBM channel partners. There is also no doubt that XIV captures the attention of the customer and vendor community: this Peer Incite, our second on XIV, was the best-attended Wikibon Peer Incite since we started these meetings in early 2007. Combined, the two XIV Peer Incites drew nearly 200 attendees.

This issue of the Wikibon Peer Incite newsletter explores XIV's implications based on the community's discussion last Tuesday and its place in IBM's product strategy. G. Berton Latamore


XIV August 2008: The jury's still out

David Vellante with Josh Krischer

Late in 2007, IBM acquired Israeli-based XIV, a small company headed by the father of EMC’s Symmetrix, storage industry technology icon Moshe Yanai. At the time, IBM touted XIV as appropriate for so-called cloud computing and Internet applications, positioning XIV as incremental to products in IBM’s storage portfolio.

At the time of the announcement, the Wikibon community consensus was that while the XIV acquisition was intriguing, it wasn’t cause for any immediate customer action (see IBM's Acquisition of XIV) and did not in fact appear to be well suited for the cloud. Rather, the community felt it would be positioned as a general purpose system, directly competing for large segments of data center storage that did not require the very highest performance. Furthermore, the community’s analysis suggested that XIV was important as its technology offered many attributes of emerging architectures, including virtualization and thin provisioning, combined with a methodology to spread data across all the drives in an array, in a manner reminiscent of 3PAR or even the Google File System, all while using standard off-the-shelf components.

The most important aspect of the announcement at the time however was that XIV was now part of IBM’s enormous franchise. Placing an innovative product line in IBM’s portfolio was newsworthy and potentially significant.

Earlier this month, perhaps mistakenly, IBM unveiled certain details of version 2 of XIV on its Web site. Also, reports from the field suggest that IBM has formed a special ‘Tiger Team,’ in part comprised of former EMC sales reps, to secure early customers beyond XIV’s initial customer base, which included several banks in Israel. Announcement activity has primarily been concentrated in Europe with virtually no comments from IBM’s U.S. storage groups.

What is unique about XIV?

Several attributes of IBM’s XIV were made clearer with this quiet release, including:

- XIV is the Model-T of storage arrays and currently comes in one flavor only; a 180TB mirrored configuration offering users approximately 90TB of storage. The system is always shipped fully populated. The only option seems to be the power cord.

- The array is comprised of standard components – 1TB SATA drives, processors, cache, Ethernet cards, FC, and iSCSI ports are all off-the-shelf.

- The XIV architecture is fully virtualized, supports thin provisioning and is optimized for extremely high capacity utilization, super low cost, and simplicity. Each volume is split into 1MB pages, and data is spread across all devices in the array. The system is designed for ease-of-use and is self-tuning, similar to 3PAR’s approach.

What’s missing from the XIV’s approach?

- Since all drives are used to read and write volumes, mixing different drive capacities within a single XIV rack is not supported and doesn’t make sense, making tiered storage an unlikely feature.

- Tier 0 (using flash) is not supported and also doesn’t make sense with the current architecture.

- Spindown is also not supported and doesn’t make sense since I/O’s are evenly distributed across all drives.

- All 180 drives must be used. Configurations smaller than 180TB (mirrored) are only supported by allowing users to use less capacity on each disk drive, with the proviso of locking customers in to future capacity increments.

- Asynchronous remote copy is an apparent gap in the offering.

Clustering between racks will allow XIV to address these shortcomings, expanding configuration options and enabling the mixing of racks with different drive performance characteristics.

How energy efficient is XIV?

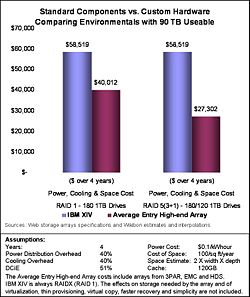

A simplistic analysis of energy efficiency suggests that because of the need to fully mirror, its ‘brute force’ use of standard components, and its UPS approach, the environmental profile of XIV appears substantially less attractive than that of competitive offerings. A like-to-like comparison using similar cache and drive capacities suggests that the four-year environmental costs (power, cooling, space) of a mirrored XIV (180TB raw) approach $59,000 while the industry average for competitive mirrored products is around $40,000. Using RAID 5 would lower the competitive costs to around $27,000. Because XIV does not support RAID 5, a like-to-like comparison cannot be made here.
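To make the size of the gap concrete, the arithmetic behind these figures can be sketched as follows. The three dollar amounts are the ones quoted above; the helper name `premium` is purely illustrative and not part of any Wikibon model.

```python
# Four-year environmental cost figures (power, cooling, space) quoted in the text, in US dollars.
XIV_MIRRORED = 59_000
COMPETITOR_MIRRORED = 40_000
COMPETITOR_RAID5 = 27_000


def premium(xiv_cost, other_cost):
    """Return (absolute difference, percentage premium) of XIV over another option."""
    diff = xiv_cost - other_cost
    return diff, 100.0 * diff / other_cost


mirrored_diff, mirrored_pct = premium(XIV_MIRRORED, COMPETITOR_MIRRORED)
raid5_diff, raid5_pct = premium(XIV_MIRRORED, COMPETITOR_RAID5)

print(f"vs. mirrored competitors: ${mirrored_diff:,} more ({mirrored_pct:.1f}%)")
print(f"vs. RAID 5 competitors:   ${raid5_diff:,} more ({raid5_pct:.1f}%)")
```

Because the inputs are rounded ("approach", "around"), the results should be read as rough orders of magnitude rather than precise deltas.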

The caveat of this analysis is the degree to which the expected higher utilization of XIV will offset some of these disadvantages. However, customers should not purchase XIV solely for its environmental characteristics.

How can customers exploit such an architecture?

The Wikibon community sees four main opportunities for customers to take advantage of approaches typified by XIV:

- By utilizing XIV to perform near CDP.

- By driving increased simplicity. Because XIV spreads data across all devices and manages performance automatically, customers’ performance tuning tasks will be much simpler.

- By carving out the ‘fat middle.’ By exploiting high utilization and low cost components, it would seem XIV is best positioned below Tier 1A and above archiving.

- By negotiating hard for a lower purchase price. XIV is designed to be inexpensive, with reports of $3-$4/GB raw. This is considerably lower than most purpose-built arrays although higher than products such as EMC’s Hulk and HP’s Extreme storage, which don’t have the same function as XIV. Thus IBM is in the position to undercut the competition in its class.

What does this announcement mean for competitors?

In the near term, the product lacks scalability and has the usual inherent new design risks. The Wikibon community believes clustering will make or break XIV, and if IBM can get this capability to work, it will have a fantastic product.

Internally to IBM this product could cause immense chaos. It appears that XIV could be positioned to replace midrange products as well as IBM’s DS8000 and DS4000 over time. Longer term, XIV’s spread-everywhere approach and simple storage management bear watching and, along with architectures from the likes of 3PAR, Compellent, Pillar, and others, will collectively pressure users to carefully consider alternatives to traditional storage management approaches.

The economics of XIV also bear watching as it represents perhaps the most cost-effective general purpose architecture we’ve seen to date.

Action Item: With XIV, IBM is signaling that it has an increased appetite for storage R&D. To make its investments pay off, IBM is combining a low-cost, volume commodity approach with an innovative architecture that is aimed at the sweet spot of the data center. Unfortunately it’s not quite ready for prime time. Users should kick the tires of XIV to determine the degree to which automated performance management will change storage administration best practice. The bottom line on XIV is it legitimizes fully virtualized architectures. The question users should answer on XIV is do the lower acquisition costs and simpler management attributes warrant the substantial migration costs and risks of moving from existing platforms.

The IBM XIV Storage System Model A14

Josh Krischer, Josh Krischer & Associates

Economy in price and operation are the driving concepts behind XIV. As a result, its designers focused on operational simplicity and use of standard, off-the-shelf components to create a system that is not absolute top end but rather is good enough to support the vast majority of enterprise data storage needs. Traditional enterprise high-end storage architectures are switched matrix with host and device adapters, such as the DMX from EMC and Hitachi’s USP, or tightly coupled (CMP), such as IBM DS8000. Most traditional mid-range systems use dual controllers with mirrored cache, for example EMC’s CLARiiON, Hitachi’s AMS, HP’s EVA, Dell’s EqualLogic PS series, etc. In the last few years a new breed of block-based clustered storage system designs has been deployed, such as 3PAR's InServ, LeftHand Networks SAN/IQ, NEC's HYDRAstore, Sun's Fire X4500, and XIV (IBM).


In contrast, the IBM XIV is a cluster-based array of storage devices with “raw” capacity of 180 TByte (usable 82.5 TByte), 24 ports of 4Gb Fibre Channel host connectivity and six ports of 1Gb iSCSI connectivity. The system is built from SATA disks. However, the internal design and sophisticated cache algorithms allow performance comparable to high-end systems built with FC disks.

Rack Structure Rel.1 (the initial models of Nextra sold by XIV)

- Each rack: 3 power supply UPSs and 11 slots (e.g. 3 interface modules, 8 data modules as standard), 2 Ethernet switch modules (the interface modules provide host connections)

- x86 Industry standard modules (modified Linux) , 4 GB cache and 15 slots for 1 TB SATA drives

- 1GbE in Rack, 10 GbE between racks (Infiniband in the future versions)

Rack Structure Rel.2 (IBM)

- Each rack: 3 power supply UPSs and 15 2U slots. The modules are unified data and interface modules.

- x86 Industry standard modules (modified Linux) , 8 GB cache and 12 slots for 1 TB SATA drives

- 1GbE in Rack, 10 GbE between racks (Infiniband in the future versions). The subsystem is designed to support up to 7 additional racks with a raw capacity of up to 1440 TByte.

- Multiple 1Gbps Ethernet connections between the modules

- In module interface 2x PCI-X with 8 GBps
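The Release 2 figures above hang together arithmetically, which the following back-of-the-envelope sketch illustrates. It assumes every drive slot holds a 1 TB drive and reads the 1440 TByte maximum as eight fully populated racks (one base rack plus seven more); both are interpretations of the list above, not IBM specifications.

```python
# Figures quoted in the Rel.2 list; MAX_RACKS is an assumption (1 base rack + 7 additional).
MODULES_PER_RACK = 15
DRIVES_PER_MODULE = 12
DRIVE_TB = 1
CACHE_GB_PER_MODULE = 8
MAX_RACKS = 8

drives_per_rack = MODULES_PER_RACK * DRIVES_PER_MODULE
raw_tb_per_rack = drives_per_rack * DRIVE_TB
mirrored_tb_per_rack = raw_tb_per_rack / 2   # every chunk is stored twice
cache_gb_per_rack = MODULES_PER_RACK * CACHE_GB_PER_MODULE
max_raw_tb = raw_tb_per_rack * MAX_RACKS

print(f"drives per rack:       {drives_per_rack}")
print(f"raw capacity per rack: {raw_tb_per_rack} TB")
print(f"capacity after mirror: {mirrored_tb_per_rack:.0f} TB (before spare space and metadata)")
print(f"cache per rack:        {cache_gb_per_rack} GB")
print(f"raw capacity, 8 racks: {max_raw_tb} TB")
```

The gap between the 90 TB that simple halving yields and the 82.5 TByte usable figure quoted earlier is presumably reserved space; the source does not break it down.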

Concept and data structure

The system is completely virtualized. The data is divided into 1Mbyte chunks, which are spread across all disks in the system. To provide redundancy, each chunk is written twice on different modules, which also ensures cache redundancy for write data. Each data module keeps some ‘primary’ and some ‘secondary’ blocks. Adding new modules will cause a proportional part of the data to be copied onto them. A module failure will cause the reverse action; the “lost” chunks will be copied from the redundant copy onto the remaining modules. These operations are completely automated and transparent to the users and the storage administrator. “Spreading” the data uniformly on all installed disks avoids creation of “hot spots” and ensures quasi-constant response times, which is very important for interactive applications.
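The placement scheme described above can be illustrated with a toy model. This is a minimal sketch under stated assumptions, not XIV's actual algorithm, which the source does not describe: placement here is simple pseudo-random selection at module (not disk) granularity, and the function names `place_chunks` and `fail_module` are hypothetical.

```python
import random
from collections import Counter

CHUNK_MB = 1  # chunk size quoted in the text


def place_chunks(volume_mb, modules, seed=0):
    """Assign each 1 MB chunk a primary and a secondary module (always different)."""
    rng = random.Random(seed)
    placement = []
    for _ in range(volume_mb // CHUNK_MB):
        primary, secondary = rng.sample(modules, 2)
        placement.append((primary, secondary))
    return placement


def fail_module(placement, failed, modules, seed=1):
    """Re-create lost copies on surviving modules, using the surviving copy as the source."""
    rng = random.Random(seed)
    survivors = [m for m in modules if m != failed]
    repaired = []
    for primary, secondary in placement:
        if failed in (primary, secondary):
            keep = secondary if primary == failed else primary
            new = rng.choice([m for m in survivors if m != keep])
            primary, secondary = keep, new
        repaired.append((primary, secondary))
    return repaired


modules = list(range(15))  # 15 modules per rack, as in the Rel.2 description
placement = place_chunks(volume_mb=15_000, modules=modules)
load = Counter(m for pair in placement for m in pair)
print("copies per module before failure:", min(load.values()), "to", max(load.values()))

repaired = fail_module(placement, failed=3, modules=modules)
load_after = Counter(m for pair in repaired for m in pair)
print("copies per module after failure: ", min(load_after.values()), "to", max(load_after.values()))
```

Even with naive random placement the per-module load stays within a narrow band, and after a failure every surviving module both supplies and receives a share of the re-mirrored chunks, which is the property the text credits for the absence of hot spots.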

The cache is managed in 4Kbyte blocks, which is ideal for databases and interactive operations. A random “no hit” read will transfer 64 Kbytes from disk to cache, with sequential accesses up to 1MByte.

Functionality

XIV supports advanced functions such as synchronous remote mirroring, thin provisioning, and writable snapshot technology. It supports up to 16,000 snapshots, which can be created in about 150 msec with a single click or command, regardless of volume size or system size. The “clones” can be read-only or read/write. The snapshots can be gathered in consistency groups and managed as a group. It uses Redirect-on-Write (RoW) instead of Copy-on-Write (as in the original Iceberg from STK, aka RVA, SVA); RoW requires only two seek operations (read, write) per write, whereas CoW requires three (read, write, write). RoW causes less write overhead, but a snapshot deletion or automatic expiration creates an overhead penalty as the data in the snapshot location must be reconciled back into the original volume. The huge number of snapshots and their fast creation may be very useful for Continuous Data Protection (CDP) deployments.
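The difference between the two snapshot techniques is easiest to see in a toy model. The sketch below is an illustration only: the class names are hypothetical, blocks are plain Python strings, the redirect-on-write volume always writes to a fresh location for simplicity, and no attempt is made to reproduce the seek counts cited above or XIV's real metadata structures.

```python
class CowVolume:
    """Copy-on-write: before a block is overwritten, its old data is copied to the snapshot."""

    def __init__(self, blocks):
        self.live = dict(enumerate(blocks))
        self.snapshots = []  # one dict per snapshot: block number -> preserved old data

    def snapshot(self):
        self.snapshots.append({})
        return len(self.snapshots) - 1

    def write(self, block_no, data):
        for snap in self.snapshots:
            if block_no not in snap:          # preserve the old data once per snapshot
                snap[block_no] = self.live[block_no]
        self.live[block_no] = data            # then overwrite in place

    def read(self, block_no):
        return self.live[block_no]

    def read_snapshot(self, snap_id, block_no):
        return self.snapshots[snap_id].get(block_no, self.live[block_no])


class RowVolume:
    """Redirect-on-write: new data lands in a new location; snapshots keep the old pointers."""

    def __init__(self, blocks):
        self.store = list(blocks)              # physical locations
        self.live = list(range(len(blocks)))   # logical block -> physical location

        self.snapshots = []

    def snapshot(self):
        self.snapshots.append(list(self.live))  # copies pointers only, no data
        return len(self.snapshots) - 1

    def write(self, block_no, data):
        self.store.append(data)                 # old data is left untouched
        self.live[block_no] = len(self.store) - 1

    def read(self, block_no):
        return self.store[self.live[block_no]]

    def read_snapshot(self, snap_id, block_no):
        return self.store[self.snapshots[snap_id][block_no]]


for cls in (CowVolume, RowVolume):
    vol = cls(["a0", "b0", "c0"])
    snap = vol.snapshot()
    vol.write(1, "b1")
    print(cls.__name__, "live:", vol.read(1), "snapshot:", vol.read_snapshot(snap, 1))
```

In both models the snapshot itself copies no data, which is consistent with creation time being independent of volume size; the techniques differ in whether the old data or the new data is the one that moves on the first overwrite.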

Availability

XIV redundancy is designed on the system level and not the component level. The even load distribution on all drives improves SATA drive MTBF. The three power units provide N+1 power supplies with double conversion (AC to DC to AC), eliminating power spikes, and provide batteries for 15 minutes of uptime. All the modules are connected by redundant Ethernet switches. The system continuously logs events and statistical data. As a background operation it automatically performs scrubbing and drive monitoring. After a disk failure, the rebuild covers only allocated and written data (not the full HDD capacity). Because the data is spread across all modules, all modules also participate in the rebuild, which decreases the time to approximately 30 minutes for a 1TByte disk. Recovering a data module typically takes about 3-4 hours.
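A rough calculation shows why a distributed rebuild can be this fast without stressing any single drive. The sketch assumes the worst case of a completely full 1 TB drive (decimal units) and that the work is shared evenly by the 179 surviving drives; neither assumption comes from IBM, and the result is an implied rate, not a measured one.

```python
DISK_MB = 1_000_000          # 1 TB drive, decimal units, assumed completely full (worst case)
REBUILD_SECONDS = 30 * 60    # "approximately 30 minutes" from the text
SURVIVING_DRIVES = 180 - 1   # assumes every remaining drive shares the work evenly

aggregate_mb_s = DISK_MB / REBUILD_SECONDS
per_drive_mb_s = aggregate_mb_s / SURVIVING_DRIVES

print(f"implied aggregate rebuild rate: {aggregate_mb_s:.0f} MB/s")
print(f"implied load per surviving drive: {per_drive_mb_s:.1f} MB/s")
```

Since only allocated and written data is rebuilt, the real per-drive load would be lower still on a partially filled system.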

Storage Management

The key word here is simplicity! To create a new volume, the users define name and size in Gbytes or Tbytes. There is no need to create RAID groups, to decide on layout or to set configuration. The management is done through a GUI or by CLI. When an “event” occurs, the box notifies the manager by SNMP, e-mail, or SMS. Because the data is uniformly distributed among all installed disks, there is no need for performance tuning.

What is missing to be “real” enterprise?

One of the definitions of enterprise high-end storage is mainframe support. XIV doesn’t support the Count Key Data (CKD) mainframe format and therefore cannot be included in this group. It supports synchronous remote copy via FC or IP, but it is not PPRC v.4 compatible, and its asynchronous technique is a “statement of direction” only. The current granularity is poor, and it is not yet supported by the IBM System Storage Productivity Center (SSPC). Its 24 FC ports are significantly fewer than on any enterprise high-end system but many more than on most mid-range systems.

Future Developments

Most of these points could be developed for future versions, depending on how IBM plans to position the XIV within its storage portfolio. An interesting opportunity could be the integration of Diligent ProtecTier with XIV. This should not be a very complicated issue; Diligent uses Linux, and both design centers are based in Tel-Aviv, only a few miles apart. An integrated system, possibly with a smaller cache, could be an ideal backup and archiving solution for large enterprises.

References

XIV sold 40 systems before being acquired by IBM, 35 in Israel and five in the United States. Most of the customers in Israel are tier 1 corporations and include one of the country's two largest banks and the leading telco. Since the acquisition, XIV has continued to sell the box under its own nomenclature, almost doubling the installed base. The first customer, Bank Leumi of Israel, currently has eight systems; this customer used (and still uses) high-end storage from EMC, HDS and IBM.

Action Item: The XIV launch from IBM is potentially a very disruptive event, a fact which most storage vendors have ignored. The combination of a system built from standard industry components and SATA disks with IBM's purchasing power can be an explosive mixture, with the potential to cause global chaos in storage prices. It will allow IBM sales to offer prices which will win every deal where price is the main criterion. XIV is, however, not a Tier 1 product, since it is missing several functions. Rather, its performance and connectivity place it between today's Tier 1 and popular mid-range (Tier 2) systems, though it still has the potential to replace many aging high-end systems successfully. Its price and management simplicity promise low CapEx and lower OpEx, which are in high demand in times of a weak global economy. IBM's umbrella of company viability, global services, sales and flexible financing provides the infrastructure and customer security which XIV was missing as an independent company. It is worthwhile for every organization to evaluate XIV as a part of its non-mainframe storage procurement.

XIV - Simplicity and No More Tiers?

The Aug. 12 2008 announcement of IBM's XIV disk storage array is, in some ways, counter to other recent disk announcements but may foretell what some of the key disk storage ingredients for success will be in the future. By initially supporting up to 180, 1-terabyte SATA drives that spread disk volumes across all physical drives, several opportunities to simplify storage management present themselves:

- There are no distinct technology tiers such as flash, high-performance disk and power-up and power-down disk to configure and manage.

- All volumes are divided into 1MB partitions which are randomly distributed across all 180 drives, reminiscent of single-level storage on the iSeries (formerly the AS/400). This approach establishes a relative equilibrium and further simplifies many time-consuming and often complex data placement and performance tuning chores, since customers simply can't influence much in this area as there aren't many knobs to turn.

- XIV claims mainframe, DFSMS-like, allocation levels far beyond the typical 30-45% utilization levels for non-mainframe systems. The question of "what applications are best suited for XIV" might be better stated as "what applications are not best suited for XIV", given this automatic approach to data allocation. As a result of this, XIV may usher in the long-awaited shift from a CAPEX to OPEX customer mentality as operational overheads appear to be minimal.

Action Item: XIV represents a "no more tiers" storage solution - at a time when storage tiers are quite popular. Don't try to fit XIV into a single level of the tiered storage hierarchy. XIV is a low-touch, hands off, don’t fool with it, disk storage system. If you trust IBM to do most of the management tasks transparently, XIV may be for you. With limited resources and mounting economic pressures, potential operating cost benefits could be significant. A deeper look at the potential benefits XIV offers is warranted.

XIV is a wakeup call for storage admin

The basic philosophy behind XIV is let the system manage complexity (e.g. data placement, performance tuning, etc). XIV’s virtualization approach that spreads data over all disks in the array is optimized for capacity utilization, performance, and storage management simplicity. Generally, 3PAR is accepted in the industry as the gold standard for ease-of-use, and it appears at least on paper that XIV is challenging 3PAR's leading status. While XIV is newer and doesn’t have the infrastructure built up around it that other entrants have, the trend in the storage industry is becoming clearer: ease-of-use, simplicity, and reduction in complex management tasks are increasingly in demand.

As CFOs pose questions like “Does IT matter?” and senior management is intensely focused on both CAPEX and OPEX efficiencies, it stands to reason that IT professionals who have built up skills around managing storage complexity should ask themselves: “How valuable will these skills be in five years?”

Some of the more cumbersome tasks that are necessary in many of today’s storage environments are things like LUN management and data placement. For example, when you want to create a LUN, you must specify where you want the LUN to be placed by finding contiguous space on the array that is large enough to handle the application. If that physical space runs out, you need to find a new location (more contiguous space) and move data around. This space needs to be defined, set up and ‘attached’ to the applications via a logical unit number (LUN) that is specific to an array and a volume which is mounted by an application. Storage admins need to understand the connection between the applications-->volumes-->devices. In addition, the admin needs to understand the ports through which an application accesses devices. This is critical because security practices dictate that data on a LUN can only be addressed by applications with requisite access rights.

These tasks are often deemed as onerous and require special knowledge, skills, and processes built up over many years. While there are tools and management systems that help facilitate these tasks (e.g. EMC’s Virtual LUNS), underneath the covers this is all going on, and storage admins need to have fairly specific knowledge of these dynamics in case something goes awry.

In theory, this all goes away with infrastructure like XIV. Storage pools are created for capacity isolation reasons, not performance. The storage admin does not plan the layout of the volumes relative to physical drive resources. Storage pools and volumes can be dynamically resized. LUN Maps define the open systems server and the LUNs to be accessed. Logical volumes can be added to or removed from any map dynamically. The reason is that everything is broken up, and the volume (a logical construct) is protected by its volume identifier, and you define what applications can address that volume. All security, path allocation, data placement, copy management, cache management, and everything else is dealt with by the ‘black box’ array OS. Again, in theory a customer has complete security, automation, data placement, performance tuning, free space allocation, etc. The drawback is the level below the logical construct cannot be accessed by the admin. If something goes wrong…you’re dead. This approach requires trust that everything is going to be managed correctly.

These issues apply not only for operations but also application development. If application design specifies THE highest performance – you will pay the price, perhaps unnecessarily. Organizations should avoid designing applications to require purpose-built storage unless it is absolutely justified.

Action Item: Organizations need to have earnest discussions about the business value of data management and decide where they want resources to be targeted. High-performance, mission-critical applications may warrant traditional complexity; however, most information in the data center requires good enough performance and the greatest simplicity possible. Emerging architectures like 3PAR and XIV provide a glimpse of the future of storage management, underpinned by removing complexity, not just hiding it.

Managing the Introduction of XIV

In this time of political campaigning, candidates are trying to define their opponents before they can introduce themselves. IBM has announced the XIV candidacy by stealth and has then kept quiet while its competition defines the product for it. To add to the confusion, Wikibon has learned that installations are hearing three sets of messages from IBM:

- The XIV evangelists, who say the XIV can do everything;

- The current storage specialists, who are trying to keep the XIV out, especially from DS8000 accounts;

- IBM storage marketing, who initially positioned the XIV as a Web 2.0 box.

The XIV is designed with a radical new architecture. It is new in the marketplace, with very few customers. Initial feedback from some of those customers has been good, but it is too early to be certain about the performance, availability, and storage management characteristics of the XIV, or about what storage management best practice is for this architecture. There are no famous IBM Redbooks as yet.

One of the important areas of understanding is the performance of the XIV as it reaches a utilization of 85% or more of its maximum capacity with aggressive I/O rates. Storage administrators will need to know when the system will break and how to know when an XIV system hits the knee of the curve.

Action Item: Storage administrators should kick the tires for longer than usual with this array and focus on XIV performance characteristics. Storage executives should demand and read multiple Redbooks from IBM and ask for detailed workload characteristics, I/O rates, and response times from success stories. Oh… and storage executives should write up their experiences on Wikibon (or call me and I will write it up for them) so that the whole community can learn best practice about a potentially groundbreaking architecture.

IBM's XIV "No Tiers" - A Wakeup Call to the Industry?

Before IBM acquired it, XIV was a niche company with an interesting product. Now with the muscle of IBM, a low product cost, great features, and superior ease of use, IBM is well positioned to compete in many market segments where it did not have competitive products.

Competitors cannot afford to ignore this threat. But will users take lower capital costs and ease-of-use over greener products with lower operating costs? Clearly the success of innovators such as 3PAR and Compellent has shown how powerful a selling point ease-of-use is. Historically, however, ease-of-use has found broader acceptance in smaller shops – small in people, not storage capacity. But today the market is larger, and XIV is a good fit for clients who want to be able to rapidly grow capacity without managing multiple tiers of storage to increase performance and reduce cost.

Moreover, the industry has struggled for years with storage management approaches such as HSM, ILM and now tiered storage. IBM’s XIV consolidated utility storage does away with those approaches, and this argument will likely be compelling to a large segment of the market, particularly for fast-growing, dynamic, mixed, and emerging workloads.

In addition, while there is much talk these days about Green Storage, many IT shops don’t even see the electric bill and do not have any green IT initiatives. And, IBM’s XIV provides high-speed performance while relying on lower-power SATA drives. What’s more, we think it likely that IBM will add a grouping capability aimed at being able to power off some drives.

Action Item: Vendors should not only focus on green storage, but green storage that is easy to use and is based on commodity hardware.

IBM XIV: Standard components vs. custom hardware

The IBM XIV consists of standard volume components - no hardware has been specifically designed to support storage arrays. The disk drives are standard one terabyte SATA devices. The processors and cache are standard Intel architecture. The Ethernet cards, FC and iSCSI ports are industry standard. There are three UPS’s designed to preserve cache integrity.

Lack of custom hardware in the XIV is mirrored by the lack of custom configurations. It is the model-T of storage arrays; it comes with 180 drives; if you want fewer, you still get 180 drives. You just get less space on all the 180 drives, and have to promise to buy the additional space soon. The only optional feature seems to be the power cord.

The use of purpose designed components is widespread in arrays. For example, DataDirect use special FRUs to allow a deep pipeline of data being read or written, and allow errors in that data to be corrected in flight without having to reread the data. The benefit is blistering sustained 6GB/sec read and write speeds, which is important to certain high-performance applications such as feeding supercomputers and movie making. The disadvantage is the development and maintenance cost of specialized hardware and the drag in the speed of adoption of new technology. Most other vendors use a similar custom approach to speeding up the hardware of storage arrays.

IBM is betting that in the long run using standard components and putting development resources into software will allow the fastest evolution, fastest time to market and the overall most cost-effective solutions. The one great advantage of this approach (also taken by HP’s StorageWorks 9100 Extreme and EMC with Hulk) is a lower cost of storage. Early reports suggest that IBM is coming in at about $3-4 per gigabyte for block storage on the XIV, which is very competitive with modular storage for the functionality delivered.

The approach also has negative consequences:

- Using standard components requires additional hardware with higher power requirements than custom hardware

- Standard components will not produce the highest performance in any category

- To reach the highest levels of performance with standard components mandates a clustered controller approach, with additional overhead and complexity

These negative consequences are illustrated by the higher environmental costs as shown in Figure 1. This is a comparison of like configurations of 180 1TB disks with 120GB of cache; the assumption is that two centralized custom controllers with 120GB of centralized cache will perform about the same as the distributed commodity controllers and 120GB of distributed cache. In a RAID 1 environment, the space costs of the alternative arrays evaluated from 3PAR, EMC & Hitachi were higher because they took two frames (except for 3PAR). However, the cost of power and cooling is higher on the IBM XIV, and overall its four-year environmental cost is $18,500 higher. The main reasons for these higher energy costs are commodity components requiring a higher number of controllers, and three high-function UPS modules.

In a RAID 5 configuration, all the arrays required only one frame. Because fewer drives were required for RAID 5, all the alternative configurations had lower environmental costs, an average of $31,000 over four years. RAID 5 is not available on the IBM XIV, so RAID 1 was used in the comparison.

The arrays from 3PAR & Hitachi have similar functionality that would impact storage utilization (full virtualization, thin provisioning and virtual copies). The EMC arrays have thin provisioning functionality and more limited virtualization. The established arrays have richer functionality overall, especially in remote recovery.

Action Item: The key benefits of the IBM XIV are cost, simplicity and ease of use. The performance will usually be “good enough”. The environmental costs will be a little higher than with custom hardware. IT executives should include all these factors when evaluating the IBM XIV and other full virtualization systems. Wikibon believes that there will be many workloads where the additional environmental costs will be significantly outweighed by lower storage administration costs and faster time to implement change.

Footnotes: This evaluation was made using generic power specifications and is not directly indicative of the power consumption of the IBM XIV product. The analysis assumes like-to-like cache and SATA disk configurations and does not account for the potentially higher capacity utilization of XIV and possible higher performance using SATA-only configurations.
