Enhancing Cloud Services with Hybrid Storage

From Wikibon


Revision as of 17:34, 4 September 2013

Storage Peer Incite: Notes from Wikibon’s July 30, 2013 Research Meeting


When Utah-based colocator Voonami developed its IaaS offering, it found it needed higher performance storage. So naturally it called its incumbent provider, in this case NetApp, with whom it has had a long, happy relationship. However, said Voonami Sales Engineer Steve Newell in Wikibon's July 30 Peer Incite meeting, the all-disk solution that NetApp proposed was far beyond Voonami's budget. And NetApp seemed uninterested in listening to Voonami's needs, instead trying to dictate the expensive solution.

That sent the Voonami team on a quest for a solution that would meet the company's needs at an affordable price. Initially the team was wary of flash solutions, believing that they would be more expensive than disk. After several disappointing conversations with a variety of vendors, none of whom could meet the company's needs, the team heard of a hybrid flash storage startup called Tegile and called it as a last resort.

Somewhat to Voonami's surprise, Tegile's solution met or exceeded all of Voonami's requirements, including its high IOPS spec, at a price that fit its budget. And Tegile worked with the team to design a solution that fit both its immediate and longer-term needs.

Today Voonami still uses its legacy NetApp disk arrays, which continue to work well. But its IaaS service is built on Tegile, which has become its preferred vendor. The main reason for that change, Newell says, is that Tegile showed itself to be a vendor that Voonami could partner with for the long term, although he admits that cost issues were also important.

The lesson for CIOs here is: do not presume that flash is the "Cadillac" solution that will always be more expensive. The best storage solution today depends on the requirements that need to be filled. Disk may still be the right solution for some applications where high performance is not important. But when you need high performance, look to either a hybrid system or, if the amount of data is within reason, an all-flash array.

Bert Latamore, Editor


Enhancing Cloud Services with Hybrid Storage and Modern Infrastructure

Voonami

On July 30, 2013, the Wikibon community gathered to discuss the intersection of new infrastructure architectures and cloud computing with Utah-based Voonami. This service provider has two sites in Utah that provide colocation, hosting, and public and hybrid cloud managed services. Sharing his experiences was Steve Newell, who, after spending seven years in software development, is now a sales engineer at Voonami, which has transitioned from a pure colocation provider to offering multiple services.

Watch the video replay of this Peer Incite.

Building the Infrastructure to Deliver Cloud Services

The infrastructure that delivered Voonami’s public cloud offering was running into capacity and performance limits. The stack consisted of NetApp storage, newly deployed Cisco UCS compute, and VMware for the hypervisor, network virtualization, and vCloud Director. While the storage team was very happy with the features and functionality of the NetApp solution, the 20TB expansion would have been cost-prohibitive with a disk-only solution.

Based on investigations a couple of years earlier, the team was concerned that flash would be cost-prohibitive, so it considered many options, including flash as a cache with NetApp and bids from a variety of other storage companies, including EMC, HP, and Nimble. Voonami’s requirements were high performance (specifically IOPS), 20TB of usable capacity, multi-protocol support (both iSCSI and NFS), and a strong management solution that could give visibility into the storage environment. Most of the solutions either did not support NFS natively or charged extra for it. The storage team was also concerned about giving up the NetApp snapshots and other functionality it relied on.

Toward the end of the search, Voonami came across Tegile. Not only was the price lower than the alternatives, but the hybrid flash architecture of Tegile provided such high performance that the storage administrator would no longer need to spend time allocating and optimizing the infrastructure based on application requirements, simplifying operations.

How Infrastructure Delivers Cloud Services for Users

The use of software-defined networking (SDN) allows each customer to have its own “virtual data center”, including an individual firewall and VPN. While VMware is the primary offering, Voonami has also created offerings based on both OpenStack (at customer locations and in the public cloud) and Microsoft Hyper-V. Steve Newell commented that customers often don’t understand that cloud is not just another rack of infrastructure: they need to change their architectures to take full advantage of cloud services. A move to modular applications helps with this conversion, especially when compute and load can each be managed separately and dynamically. Customer applications in Voonami’s cloud include Web farms, Linux/Apache stacks, many Windows Web stacks, hosted desktops, and test/dev operations. Voonami can support remote replication between customer environments and its cloud when the Tegile Zebi storage array is used at both locations.

Action item: Voonami’s advice to CIOs is that nobody knows your environment as well as you do. Don’t let vendors tell you what you need or what your pain points are. Too often companies compare the wrong metrics rather than looking for the best real solution. Both infrastructure architectures and deployment options (onsite, hosted, hybrid, or public cloud) are changing rapidly, so users need to perform thorough due diligence at their next refresh or upgrade.

Wikibon research reinforces hybrid storage as optimal choice for mainstream CIOs

Introduction

Wikibon’s own David Floyer has written an incredibly detailed article entitled Hybrid Storage Poised to Disrupt Traditional Disk Arrays. This article details a comprehensive baseline which:

- Provides a common definition of what constitutes hybrid storage,

- Provides detailed cost comparisons that explain why true hybrid storage systems are ultimately less expensive than their traditional storage brethren as the need for more IOPS grows,

- Explains the architecture that comprises hybrid storage systems, and,

- Identifies the point at which hybrid storage becomes the cost leader.

The cost/performance factor

For CIOs, ensuring that the storage selection meets operational workload needs at a reasonable cost is of paramount concern, particularly since storage can often consume a not insignificant percentage of the IT budget. For many CIOs, storage capacity has become an almost secondary concern. Certain workloads are able to leverage modern array features such as deduplication and compression technologies to great effect, thus reducing the need to worry as much about capacity as was necessary in the past. For example, VDI workloads, because of the great similarity in the virtual machines that make up the solution, can often achieve 75% or higher data reduction rates in production, practically solving the capacity issue.

So, for CIOs, the great storage capacity expansion question is easing, if only a little bit.
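The arithmetic behind a data-reduction figure is simple but easy to misread: a 75% reduction means the array stores only a quarter of the logical data, so each physical terabyte holds four logical terabytes. The sketch below assumes the rate is quoted as the fraction of logical data eliminated; the function names and the 10 TB example pool are hypothetical, not taken from any vendor tool.

```python
def physical_tb_needed(logical_tb: float, reduction: float) -> float:
    """Physical capacity needed to store `logical_tb` of data.

    `reduction` is the fraction of logical data eliminated by deduplication
    and compression, e.g. 0.75 for a 75% reduction rate (assumed convention).
    """
    if not 0.0 <= reduction < 1.0:
        raise ValueError("reduction must be in [0, 1)")
    return logical_tb * (1.0 - reduction)


def logical_tb_supported(physical_tb: float, reduction: float) -> float:
    """Logical data that fits in `physical_tb` at the given reduction rate."""
    if not 0.0 <= reduction < 1.0:
        raise ValueError("reduction must be in [0, 1)")
    return physical_tb / (1.0 - reduction)


# Hypothetical VDI pool: 10 TB of physical capacity at a 75% reduction rate.
print(logical_tb_supported(10.0, 0.75))  # 40.0
print(physical_tb_needed(40.0, 0.75))    # 10.0
```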

However, storage performance demands for modern workloads have emerged as the next great challenge. Historically, workloads in mainstream IT shops have been able to rely on spinning disks to provide enough IOPS, at least with the right planning. Organizations could choose from low-IOPS 7,200 RPM SATA disks or 15K RPM SAS disks, which provide about double the IOPS of the SATA disks. As workloads demanded more performance, CIOs could simply add more spinning disks, generally in the form of another disk shelf.
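Adding spindles for performance follows a straightforward rule: divide the IOPS target by what one drive delivers and round up. The sketch below assumes rule-of-thumb values of 80 random IOPS for a 7,200 RPM SATA drive and 175 for a 15K RPM SAS drive; these are planning assumptions, not figures from this article, and the model ignores RAID write penalties, caching, and controller limits.

```python
import math

# Rule-of-thumb random IOPS per spindle. These are planning assumptions,
# not figures from the article; real numbers vary by drive and workload.
IOPS_PER_DRIVE = {
    "7200rpm_sata": 80,
    "15k_sas": 175,
}


def spindles_for_iops(target_iops: int, drive_type: str) -> int:
    """Smallest drive count whose combined IOPS meets the target.

    Ignores RAID write penalties, caching, and controller limits.
    """
    return math.ceil(target_iops / IOPS_PER_DRIVE[drive_type])


for drive_type in IOPS_PER_DRIVE:
    print(drive_type, spindles_for_iops(7_000, drive_type))
# 7200rpm_sata 88
# 15k_sas 40
```

Roughly doubling per-drive IOPS roughly halves the spindle count, which is why faster disks were the first lever CIOs pulled.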

Capacity and performance decoupled

That was OK when capacity growth and performance demand were growing as one. Adding more disks both increased overall capacity and added more IOPS to the storage pool.

However, while capacity is still growing, modern workloads are much more IOPS-hungry than anything mainstream IT has seen in the past. Further, storage performance woes are no longer visible only to IT. Consider VDI initiatives. If VDI is implemented in an organization with the wrong storage, the result is felt directly by frustrated users, who are subjected to boot and login storms when many desktops start or log in at once and the storage cannot keep up with demand.

Without a change in storage buying options, CIOs would be forced to buy more and more disks just to meet performance demands. We have seen organizations take drastic steps, such as short stroking (confining data to the outer tracks of each disk to cut seek times), to meet performance demands, even though such techniques sacrifice usable capacity. So CIOs saw capacity and performance requirements, which had once grown hand in hand, increasing at different rates, which required strategies to balance the two needs while still managing the economics of the overall solution.
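The decoupling can be made concrete by sizing a disk pool against both constraints at once: the drive count is whichever of the capacity-bound or IOPS-bound counts is larger, and any capacity beyond the requirement is stranded. The 600 GB drive size, 175 IOPS per drive, and the requirements in the example are hypothetical placeholders.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class DiskModel:
    capacity_gb: int  # usable capacity per drive (hypothetical)
    iops: int         # random IOPS per drive (hypothetical)


def size_disk_pool(need_gb: int, need_iops: int, disk: DiskModel):
    """Return (drives, binding_constraint, stranded_gb) for a disk-only pool."""
    by_capacity = math.ceil(need_gb / disk.capacity_gb)
    by_iops = math.ceil(need_iops / disk.iops)
    drives = max(by_capacity, by_iops)
    binding = "iops" if by_iops > by_capacity else "capacity"
    # Capacity bought only to get more spindles (i.e. IOPS) sits unused.
    stranded_gb = drives * disk.capacity_gb - need_gb
    return drives, binding, stranded_gb


disk = DiskModel(capacity_gb=600, iops=175)
print(size_disk_pool(10_000, 10_000, disk))  # (58, 'iops', 24800)
```

Once IOPS becomes the binding constraint, every additional drive adds mostly unneeded capacity; short stroking makes this worse by deliberately leaving part of each drive empty.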

In order to improve performance, some vendors began adding flash-based storage to traditional storage systems and using these drives as a sort of cache in front of the hard disk. However, as David explains in his article, this has not always been the best option.

A solution emerges

As you might guess from the title of David’s article, hybrid storage solutions have emerged as the sweet spot for many modern mainstream workloads. Although IT shops still have to meet capacity needs, modern hybrid storage arrays generally provide adequate capacity, and when that capacity is combined with data reduction features such as deduplication and compression, capacity needs can be met easily. Where hybrid storage arrays truly shine for CIOs is on the performance side of the equation.

For comparative purposes, a true hybrid solution is defined as one that takes a flash-first approach to data storage. I won’t repeat the full definition here; refer to the VM-aware Hybrid Definition section in David’s article for more information.

Evaluating Hybrid Suppliers

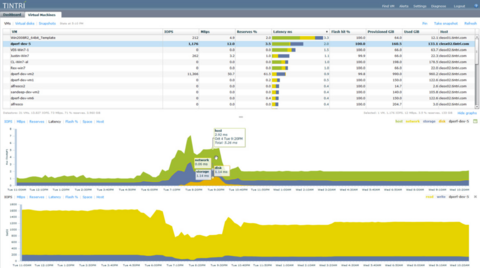

In researching his article, David spoke with customers of several vendors. The methodology laid out in his article is designed to help customers evaluate the important criteria of available solutions. The table below outlines how Tintri stacks up against the three primary characteristics that David identified as necessary for an offering to be considered a true hybrid solution.

| True hybrid characteristic | How Tintri addresses it |
|---|---|
| The IO queues for each virtual machine are fully reflected and managed in both the hypervisor and the storage array, with a single point of control for any change of priority. | Tintri provides complete end-to-end tracking and visualization of performance, from the hypervisor down and from the storage layer up, so that administrators can obtain critical statistics from whatever tool is in use. The goal is to keep storage performance at acceptable levels and to reduce write latency. |
| All data is initially written to flash (flash-first). | Tintri’s solution takes a flash-first approach: all data written to the storage array is initially written to high-performance flash storage before being offloaded to slower rotational storage. This results in much higher levels of throughput, which is one of the key differentiators between hybrid storage and traditional arrays. |
| Virtual machine storage objects are mapped directly to objects held in the storage array. | Tintri VMstore is VM-aware: it associates the storage array directly with virtual machines and critical business applications. |
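To illustrate what flash-first means on the data path, here is a deliberately tiny model: every write lands in a flash tier, and when flash exceeds its budget the least recently used blocks are destaged to disk. This is a generic sketch under simplifying assumptions (block granularity, LRU destaging, no promotion of disk reads back to flash); it is not Tintri's implementation, and the class and method names are hypothetical.

```python
from collections import OrderedDict


class FlashFirstStore:
    """Toy flash-first hybrid store (illustrative; not any vendor's design).

    All writes go to the flash tier first. When flash holds more than
    `flash_blocks` blocks, the least recently used ones are destaged to disk.
    For simplicity, reads served from disk are not promoted back to flash.
    """

    def __init__(self, flash_blocks: int) -> None:
        self.flash_blocks = flash_blocks
        self.flash = OrderedDict()  # block -> data, least recently used first
        self.disk = {}              # block -> data

    def write(self, block: int, data: bytes) -> None:
        self.disk.pop(block, None)      # the newest copy will live in flash
        self.flash[block] = data
        self.flash.move_to_end(block)   # mark as most recently used
        while len(self.flash) > self.flash_blocks:
            cold_block, cold_data = self.flash.popitem(last=False)
            self.disk[cold_block] = cold_data  # destage the coldest block

    def read(self, block: int):
        """Return (data, tier) where tier is "flash" or "disk"."""
        if block in self.flash:
            self.flash.move_to_end(block)
            return self.flash[block], "flash"
        return self.disk[block], "disk"


store = FlashFirstStore(flash_blocks=2)
for blk, payload in [(1, b"a"), (2, b"b"), (3, b"c")]:
    store.write(blk, payload)
print(store.read(1))  # (b'a', 'disk')
print(store.read(3))  # (b'c', 'flash')
```

Because writes never wait on a spinning disk in this model, write latency is set by the flash tier; disk only absorbs data that has gone cold.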

David goes into great depth and demonstrates the point at which a hybrid storage array begins to outshine traditional storage arrays from a cost/IOPS perspective. In his analysis, David uses a scenario that requires 10 TB of usable capacity and demonstrates that, at the 7,000 IOPS mark, a hybrid system and a traditional disk system have about the same price from a performance perspective. Beyond that mark, the cost of the traditional disk system continues to escalate as spindles are added to continue growing performance while the hybrid continues to have plenty of excess IOPS capacity to meet continuing needs.
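The crossover idea can be reproduced with any pair of cost curves. In the sketch below, a traditional array's cost grows with the number of spindles needed to reach the IOPS target, while the hybrid's cost is assumed flat up to its IOPS ceiling. Every price and performance figure is a hypothetical placeholder rather than a value from David's model, so the crossover it finds does not match his 7,000 IOPS result; only the method carries over.

```python
import math

# Every figure below is a hypothetical placeholder chosen only to show the
# shape of the comparison; none comes from Floyer's cost model.
CAPACITY_GB = 10_000                  # 10 TB usable requirement
DISK_GB, DISK_IOPS, DISK_PRICE = 600, 175, 1_000
ARRAY_BASE_PRICE = 20_000             # controllers, shelves, software
HYBRID_PRICE, HYBRID_MAX_IOPS = 75_000, 50_000


def traditional_cost(iops: int) -> int:
    """Disk-only array: buy enough spindles for capacity AND for IOPS."""
    drives = max(math.ceil(CAPACITY_GB / DISK_GB), math.ceil(iops / DISK_IOPS))
    return ARRAY_BASE_PRICE + drives * DISK_PRICE


def hybrid_cost(iops: int) -> int:
    """Hybrid array: assumed flat price because flash absorbs the extra IOPS."""
    if iops > HYBRID_MAX_IOPS:
        raise ValueError("beyond the assumed hybrid array's IOPS ceiling")
    return HYBRID_PRICE


def crossover_iops(step: int = 500, limit: int = HYBRID_MAX_IOPS):
    """First IOPS level (in `step` increments) where disk costs at least as much."""
    for iops in range(step, limit + 1, step):
        if traditional_cost(iops) >= hybrid_cost(iops):
            return iops
    return None


print(crossover_iops())  # 9500
```

Substituting real quotes for the placeholder constants turns this into a quick check of where the crossover falls for a specific environment.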

For a CIO, perhaps the most critical and succinct part of the analysis is as follows:

- An environment requiring 15,000 IOPS from 10 TB of usable storage would require:

  - 64 drives and 1 TB of flash in a traditional storage array with a flash cache;

  - 16 drives, including 2.4 TB of flash, in a hybrid storage array.

- The traditional storage array would cost more than twice as much as the hybrid ($190,000 vs. $88,000).
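Normalizing the two prices above by the 15,000 IOPS requirement shows the gap in cost-per-IOPS terms. This is plain arithmetic on the article's own figures; the function and dictionary names are illustrative.

```python
REQUIRED_IOPS = 15_000

# Prices from the analysis above for the same 15,000 IOPS requirement.
QUOTES_USD = {
    "traditional array + flash cache": 190_000,
    "hybrid array": 88_000,
}


def cost_per_iops(price_usd: float, iops: int) -> float:
    """Purchase price divided by the IOPS the configuration must deliver."""
    return price_usd / iops


for name, price in QUOTES_USD.items():
    print(f"{name}: ${cost_per_iops(price, REQUIRED_IOPS):.2f} per IOPS")
ratio = QUOTES_USD["traditional array + flash cache"] / QUOTES_USD["hybrid array"]
print(f"traditional / hybrid price ratio: {ratio:.2f}")
# traditional array + flash cache: $12.67 per IOPS
# hybrid array: $5.87 per IOPS
# traditional / hybrid price ratio: 2.16
```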

Action item: CIOs who are considering expanding storage or replacing existing storage need to carefully consider the full range of options before them, paying particular attention to true hybrid solutions that meet both IOPS and capacity needs and provide outstanding performance for mainstream and emerging workloads. By doing so, CIOs can avoid simply throwing more spindles at older systems and achieve much better results at much lower overall costs.

Is Orchestrating Storage or Systems the Right Approach?

Every storage vendor has made good money from proprietary tools that manage their own storage, whether that storage is a traditional array, traditional file, hybrid, flash-only, private cloud, or public cloud. Of course this software works best when 100% of the storage is managed by these proprietary tools. This approach just will not cut it as software-led infrastructure and software-led storage become established. An overall systems orchestration approach is going to be much more cost effective.

IT has specific roles to perform, whether the IT organization is centralized, distributed or a hybrid model. These include ensuring performance, reliability, recoverability, security and compliance of corporate systems, and corporate data. Just as with quality, businesses will define different organizational models of IT deployment, but the fundamental IT skills, tools, processes, and procedures need to be in place. IT, like quality, cannot be completely outsourced – it is too important.

The key to the success of software-led infrastructure is orchestration at the application or application suite level, ensuring that the correct compute, storage, networking, and business continuity resources are in place to meet the application owner's business objectives. This requires that the orchestration layer can manage and deploy every resource, and that it can see 100% of the data available from the resource layers below it. A fundamental requirement is for every device to have APIs that allow the orchestration layer to discover all of its data and metadata, and for every device to be fully manageable through orchestration APIs.
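One way to picture the requirement that every device expose discovery and management APIs is a common contract that the orchestration layer programs against. The sketch below is hypothetical throughout (`ManagedDevice`, `discover`, `apply`, `ToyArray`, and `Orchestrator` are invented for illustration, not any product's API): the orchestrator builds its view only from discovery calls and changes settings only through the contract, never through a vendor's proprietary tool.

```python
from abc import ABC, abstractmethod
from dataclasses import dataclass, field
from typing import Any, Dict, List


@dataclass
class ResourceInfo:
    resource_id: str
    kind: str                                   # "storage", "compute", "network", ...
    metadata: Dict[str, Any] = field(default_factory=dict)


class ManagedDevice(ABC):
    """Hypothetical contract every device exposes to the orchestration layer."""

    @abstractmethod
    def discover(self) -> ResourceInfo:
        """Report all of the device's data and metadata."""

    @abstractmethod
    def apply(self, setting: str, value: Any) -> None:
        """Change a setting through the orchestration API."""


class ToyArray(ManagedDevice):
    """Stand-in for a storage array that implements the contract."""

    def __init__(self, resource_id: str, capacity_tb: float, max_iops: int) -> None:
        self._info = ResourceInfo(resource_id, "storage",
                                  {"capacity_tb": capacity_tb, "max_iops": max_iops})

    def discover(self) -> ResourceInfo:
        return self._info

    def apply(self, setting: str, value: Any) -> None:
        self._info.metadata[setting] = value


class Orchestrator:
    def __init__(self, devices: List[ManagedDevice]) -> None:
        self.devices = devices

    def inventory(self) -> Dict[str, ResourceInfo]:
        """Full view of every resource, built only from discovery APIs."""
        return {info.resource_id: info for info in (d.discover() for d in self.devices)}

    def apply_to_kind(self, kind: str, setting: str, value: Any) -> None:
        """Push one policy to every device of a kind, regardless of vendor."""
        for device in self.devices:
            if device.discover().kind == kind:
                device.apply(setting, value)


orch = Orchestrator([ToyArray("array-a", 20, 50_000), ToyArray("array-b", 10, 7_000)])
orch.apply_to_kind("storage", "snapshot_schedule", "hourly")
print(sorted(orch.inventory()))  # ['array-a', 'array-b']
```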

The orchestration layer should work across all types of resources. For example, storage should be available from all sources and types, internal and external, with the same interface and the same SLA management, including IOPS, capacity, latency, reliability, recoverability, and security. This orchestration should also include the capability to meet internal and external governance standards and to report charge-back or show-back costs and projected future costs.
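A placement decision under a uniform SLA interface might look like the sketch below: filter every storage offer, internal or external, against the application's SLA, pick the cheapest one that qualifies, and report the show-back cost. The offers, prices, and field names are hypothetical, and for brevity only capacity, IOPS, and latency are checked; reliability, recoverability, security, and governance would be further filters in the same style.

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass(frozen=True)
class StorageOffer:
    name: str
    location: str             # "internal" or "external"
    free_tb: float
    max_iops: int
    latency_ms: float
    usd_per_tb_month: float


@dataclass(frozen=True)
class SLA:
    capacity_tb: float
    iops: int
    max_latency_ms: float


def place(sla: SLA, offers: Iterable[StorageOffer]) -> Optional[StorageOffer]:
    """Cheapest offer, internal or external, that meets every SLA term checked here."""
    eligible = [
        o for o in offers
        if o.free_tb >= sla.capacity_tb
        and o.max_iops >= sla.iops
        and o.latency_ms <= sla.max_latency_ms
    ]
    return min(eligible, key=lambda o: o.usd_per_tb_month, default=None)


def showback_usd(sla: SLA, offer: StorageOffer, months: int = 12) -> float:
    """Projected cost to report back to the application owner."""
    return sla.capacity_tb * offer.usd_per_tb_month * months


OFFERS = [
    StorageOffer("hybrid-array", "internal", 30, 40_000, 1.0, 100),
    StorageOffer("disk-array", "internal", 100, 3_000, 8.0, 60),
    StorageOffer("public-cloud", "external", 500, 5_000, 5.0, 40),
]

db = SLA(capacity_tb=10, iops=15_000, max_latency_ms=2.0)
archive = SLA(capacity_tb=50, iops=1_000, max_latency_ms=10.0)
for sla in (db, archive):
    offer = place(sla, OFFERS)
    print(offer.name, showback_usd(sla, offer))
# hybrid-array 12000
# public-cloud 24000
```

The same interface serves both workloads: the performance-sensitive one lands on internal hybrid storage, while the capacity-heavy archive goes to the cheapest external offer that still meets its SLA.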

Increasing the deployment of in-house and private cloud in mega-datacenters that also house cloud service providers and data aggregators relevant to an organization's vertical industry will allow optimum systems integration and management within the mega-datacenter complex. Back-hauling data and metadata within a datacenter is an order of magnitude more effective and cost-effective than attempting long-distance movement and management.
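A rough transfer-time calculation shows why proximity matters. The sketch assumes a 10 Gb/s link inside the datacenter versus a 1 Gb/s long-distance link (both hypothetical figures), decimal terabytes, and full line-rate utilization; at those rates, moving the same data takes ten times longer over the long-distance link, before latency and WAN costs are counted.

```python
def transfer_hours(data_tb: float, link_gbps: float, efficiency: float = 1.0) -> float:
    """Hours to move `data_tb` decimal terabytes over a `link_gbps` link.

    `efficiency` is the fraction of line rate actually achieved (assumed 1.0).
    """
    bits = data_tb * 1e12 * 8
    return bits / (link_gbps * 1e9 * efficiency) / 3600


# Hypothetical links: 10 Gb/s inside the datacenter, 1 Gb/s over distance.
local = transfer_hours(10, 10)
remote = transfer_hours(10, 1)
print(f"{local:.1f} h local vs {remote:.1f} h remote")  # 2.2 h local vs 22.2 h remote
```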

Action item: For enterprise IT, picking best-of-breed storage, CPU, or networking orchestration platforms is a short-term strategy that is unlikely to realize the full potential of software-led infrastructure. Enterprise IT should focus on system-level integration and pick an ecosystem and device suppliers that support Open APIs both to and from all devices. The ability to integrate legacy, private cloud and public cloud system resources will need a shift away from traditional resource stovepipes back to a systems view.