Assessment of Automated Tiered Storage for Midrange Arrays

From Wikibon

Originating Authors: David Floyer and Nick Allen

In December 2011, Wikibon published research on Automated Tiered Storage (ATS) for higher-end storage arrays and concluded that ATS was cost-justified (it reduced storage costs by about 25%) and ready for “prime time.” Wikibon found that a key element in the higher-end arrays was control over the environment. Most users, supported by early user experience, were not ready to allow their mission-critical applications to be managed by a “black box.”

This follow-on research extends the original research to the midrange marketplace. Automation is more important for this market segment, and Wikibon



Executive Summary

Storage Arrays Analysed

Wikibon picked the single most popular platform from each of the major vendors to analyze. The initial list was:

- Dell Compellent using Data Progression

- EMC VNX using FAST VP ATS together with FastCache software

- Hitachi AMS

- Hitachi VSP using Dynamic Tiering ATS software

- HP 3PAR F-Class using Adaptive Optimization ATS software

- IBM Storwize 7000 using Easy Tier ATS software

- IBM XIV

- NetApp FAS Series

The following arrays were not analyzed because they did not offer an ATS solution:

- Hitachi AMS

- Hitachi HUS system (This array family is new to the marketplace. Tiered storage is offered for file-based storage, but not initially for block-based storage. Wikibon expects block-based ATS solutions to become available and hopes to analyze them in the future.)

- IBM XIV (Flash can act as a read-only cache. The fundamental architecture of the XIV, which spreads two copies of data across all drives, only allows a single tier of storage.)

- NetApp FAS Series (Flash acts as a read-only cache. NetApp offers manual movement of data between tiers, but has been resistant to providing an automated tiered storage solution.)

The Hitachi VSP and IBM Storwize 7000 were analyzed in the previous research. This time the weightings and the comparison categories and questions were all slightly different.

ATS Evaluation Criteria & Methodology

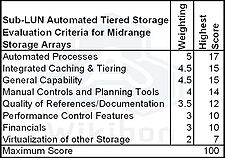

Wikibon looked at the ATS functionality that midrange arrays require for typical midrange workloads. Wikibon talked extensively to Wikibon members that had implemented or were implementing ATS for mission-critical and other workloads. As with the Tier 1 customers that Wikibon talked to, Wikibon found there was high activity and an expectation that they would deploy ATS. Wikibon discussed with its members the important functionality requirements for the midrange. Table 1 gives the evaluation criteria functionality groups that Wikibon developed and used in this analysis, and the relative weightings. Because midrange ATS is often implemented in data centers with limited technical resources, high weightings were given to automation, integration and planning capabilities. Less weighting was given to general capabilities and financials. Table 1 also shows the maximum score that is available within each criterion, with the overall maximum score being set at 100.

Wikibon expects that as products mature and understanding increases with adoption, the evaluation criteria and weightings will change. The requirements and criteria for ATS on other storage array tiers will be different, and it would be misleading to apply the findings of this study to other workloads/storage tiers.

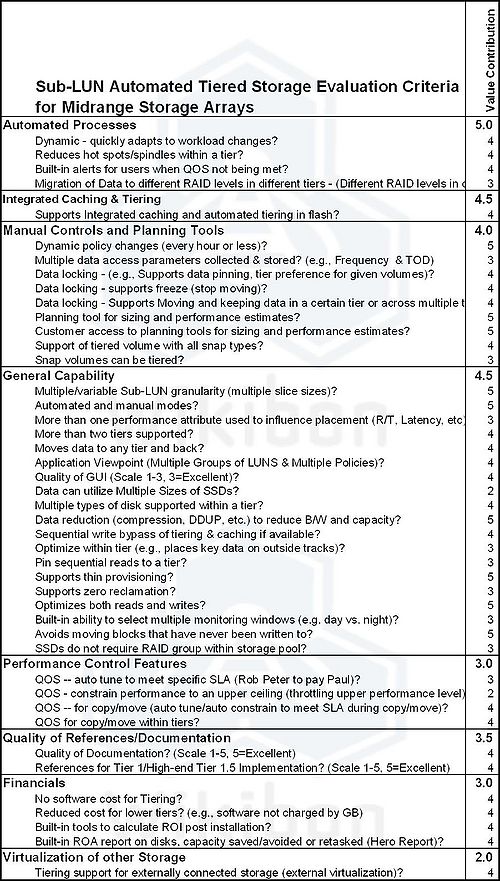

Within each of the evaluation criteria are specific functionalities, as shown in Table 2, together with their relative importance within the criterion on a five-point scale. Each functionality is assessed for each storage array as available or not available (Y/N), except for the questions on quality of GUI and data locking, which are assessed on a three-point scale, and the two questions on quality of documentation and references, which are assessed on a five-point scale.

The average weighted score is then summed for each evaluation criterion, and then normalized to a maximum overall score of 100 (See Footnote 1 for additional details on the methodology).
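The scoring arithmetic can be illustrated with a short sketch. This is one plausible reading of the methodology described here and in Footnote 1, not Wikibon's actual calculation: the criterion names, maximum scores, importance weights and answers below are all hypothetical, since the real values appear only in Tables 1 and 2. The sketch assumes that each answer is mapped to a value between 0 and 1, that answers are averaged within a criterion using the importance ratings as weights, and that the result is scaled to the criterion's maximum score.

```python
from dataclasses import dataclass


@dataclass
class Question:
    importance: int      # relative importance within the criterion (1-5)
    answer: object       # "Y"/"N", or an integer rating for scale questions
    scale_max: int = 0   # 0 for Y/N questions; 3 or 5 for scale questions


def question_score(q):
    """Map an answer to a value between 0 and 1."""
    if q.scale_max == 0:
        return 1.0 if q.answer == "Y" else 0.0
    # Linear scale: the lowest rating (1) maps to 0, the highest to 1.
    return (q.answer - 1) / (q.scale_max - 1)


def criterion_score(questions, max_score):
    """Importance-weighted average of question scores, scaled to max_score."""
    total_weight = sum(q.importance for q in questions)
    weighted = sum(q.importance * question_score(q) for q in questions)
    return max_score * weighted / total_weight


def overall_score(criteria):
    """Sum the criterion scores; the maximum scores are assumed to total 100."""
    return sum(criterion_score(qs, max_score) for qs, max_score in criteria.values())


# Hypothetical criteria and answers, for illustration only.
example = {
    "Automation": ([Question(5, "Y"), Question(3, "N"), Question(2, 2, 3)], 50),
    "Integration": ([Question(4, "Y"), Question(4, 5, 5)], 30),
    "Financials": ([Question(2, "N"), Question(2, 3, 5)], 20),
}

print(overall_score(example))  # 65.0
```

In this made-up example the three criteria score 30, 30 and 5 out of maxima of 50, 30 and 20, giving an overall score of 65 out of 100.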

Action Item:

Footnotes:

Footnote 1: This table is the source data for Figure 1. The data is as of May 2012, and is the result of detailed analysis performed by Wikibon analysts. The scope of the analysis is the generally available (GA) sub-LUN ATS software alone, and does not include other vendor or third-party software. The questions were designed to be Y/N questions, with the exceptions of the questions on GUI quality and data locking (1-3 scale), and the questions on references and quality of documentation (both 1-5 scales). These answers translate into 1 for a "Y" and 0 for an "N" for Y/N questions, and into a linear scale for the scale questions, where the lowest score is 0 and the highest score is 1. The average weighted score within each of the main evaluation criteria is calculated relative to the maximum total score shown in Table 1. These scores are shown in the table at the bottom of Figure 1, together with the overall total across all the criteria.