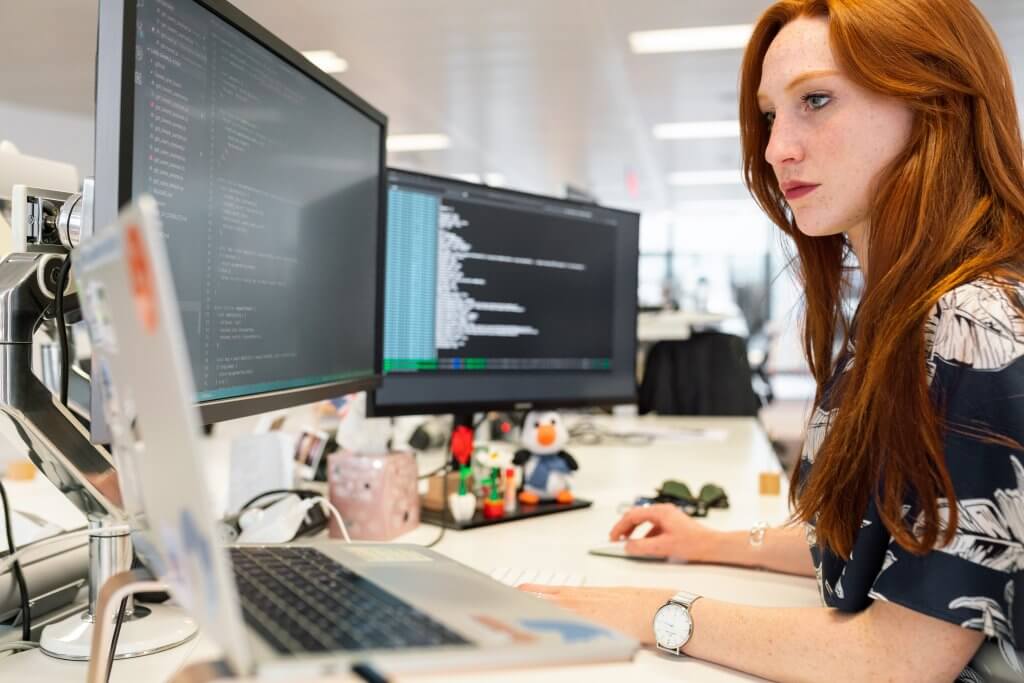

Where Tech Executives Gain Real-Time Insights + Expert Advice

Preparing today’s leaders with transformative insights, customer data, AI and modern media. What can we do for your business?

See How Technology Leaders Leverage theCUBE Research and Insights

Competitive Intelligence, Market Analysis + Trend Tracking

- Partner with experienced strategy, product, financial, technical and brand experts to guide decisions.

- Get direct access to time-sensitive research data to see changing trends and identify patterns across dozens of markets and thousands of companies.

- Gain a deeper understanding of audience behavior, target your marketing investments more accurately, and realize quicker time to value from those investments.

Personalized Advice Based on Research Data and Customized Reports

- Expert analysis

- Buyer behavior data

- Sentiment analysis

- Customer spending intentions

- Time series data

- Audience metrics

- Custom research

Independent Thought Leadership, Partnerships and AI Tools

- Rapidly create, manage + distribute content with theCUBE AI

- Personalized messaging for go-to-market pros

- Leverage our networks to amplify your brand

Explore Our Coverage Areas

Latest Research Insights

228 | Breaking Analysis | Security budgets are growing but so is vendor sprawl

David Vellante

April 27, 2024

Extreme Connects with Forward-Looking Customers

Bob Laliberte

April 26, 2024

SMBs and Cyber Risk Management: How to Up the Cyber Protection Game

Shelly Kramer

April 25, 2024

Cradlepoint Launches NetCloud SASE to Serve and Secure Agile Enterprises

Shelly Kramer

April 24, 2024

Recapping Enterprise Connect and Adobe Summit Highlights

Shelly Kramer

April 21, 2024

Microsoft Copilot for Microsoft 365 — What’s Ahead for Law Firms

Shelly Kramer

April 20, 2024

Unpacking Zscaler ThreatLabz 2024 AI Security Report

Shelly Kramer

April 20, 2024

227 | Breaking Analysis | How chasing AI shifts tech spending patterns

David Vellante

April 20, 2024

Our executive leadership averages 26 years of experience in the industry.